Mastering jhsdb: The Hidden Gem for Debugging JVM Issues

Learn how jhsdb, an overlooked tool, transforms JVM debugging with insights into performance analysis and core dump understanding.

jhsdb is a relatively underexplored yet incredibly powerful tool for debugging JVM issues. Whether you're tackling native code that crashes the JVM or delving into complex performance analysis, understanding how to use jhsdb effectively can be a game-changer in your debugging arsenal.

As a side note, if you like the content of this and the other posts in this series, check out my Debugging book that covers this subject. If you have friends who are learning to code, I'd appreciate it if you recommended my Java Basics book. If you want to get back to Java after a while, check out my Java 8 to 21 book.

Introduction

Java 9 introduced many changes, with modules as the highlight. Amid these significant shifts, jhsdb didn't get the attention it deserved. Officially, Oracle describes jhsdb as a Serviceability Agent (SA) tool; the SA is a JDK component aimed at snapshot debugging, performance analysis, and in-depth insight into the HotSpot JVM and the Java applications running on it. Simply put, jhsdb is your go-to tool for delving into JVM internals, understanding core dumps, and diagnosing JVM or native library failures.

Getting Started with jhsdb

To begin we can invoke:

$ jhsdb --help

clhsdb command line debugger

hsdb ui debugger

debugd --help to get more information

jstack --help to get more information

jmap --help to get more information

jinfo --help to get more information

jsnap --help to get more information

This command reveals the tools bundled with jhsdb:

debugd: A remote debug server for connecting and diagnosing remotely.

jstack: Provides detailed stack and lock information.

jmap: Offers insights into heap memory.

jinfo: Displays basic JVM information.

jsnap: Assists with performance data.

clhsdb and hsdb: The command-line and GUI debuggers. Both are available, but we'll focus on the GUI debugger (hsdb) for a more visual approach.

Let's dive into these tools and explore how they can aid in diagnosing and resolving JVM issues.

Understanding and Using debugd

debugd might not be your first choice for production environments due to its remote debugging nature, yet it could be valuable for debugging a local container. To use it, we first need the JVM's process ID (PID), which we can find with the jps command. Unfortunately, because of a bug in the UI, you can't currently connect to a remote server from the GUI debugger; I could only use debugd with command-line tools such as jstack (discussed below).

We can attach debugd to process 1234 with the command:

jhsdb debugd --pid 1234

We can then use a tool like jstack to connect to that debug server and get additional information:

jhsdb jstack --connect localhost

The --connect argument isn't specific to jstack; it should work with the other jhsdb command-line tools as well.
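debugd, like the local tools below, needs the PID of the target JVM. If you control the application, a simple alternative to jps is to have the process report its own PID at startup. This is a minimal sketch, assuming Java 9 or newer (for `ProcessHandle`); the class name `PidPrinter` is hypothetical:

```java
/** Hypothetical helper: prints this JVM's PID so it can be passed to jhsdb --pid. */
public class PidPrinter {
    /** Returns the operating-system process ID of the current JVM (Java 9+). */
    public static long currentPid() {
        return ProcessHandle.current().pid();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("Attach with: jhsdb jstack --pid " + currentPid());
        // Keep the process alive so there is something to attach to.
        Thread.sleep(Long.MAX_VALUE);
    }
}
```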

Leveraging jstack for Thread Dumps

jstack generates thread dumps, which are crucial for understanding what a JVM is doing on a user's machine or in a production environment. A thread dump reveals the JVM's running state in detail, including deadlock detection, thread states, and whether each frame is interpreted or compiled.

Typically, we would run jstack locally, which removes the need for debugd:

$ jhsdb jstack --pid 1234

Attaching to process ID 1234, please wait...

Debugger attached successfully.

Server compiler detected.

JVM version is 11.0.13+8-LTS

Deadlock Detection:

No deadlocks found.

"Keep-Alive-Timer" #189 daemon prio=8 tid=0x000000011d81f000 nid=0x881f waiting on condition [0x0000000172442000]

java.lang.Thread.State: TIMED_WAITING (sleeping)

JavaThread state: _thread_blocked

- java.lang.Thread.sleep(long) @bci=0 (Interpreted frame)

- sun.net.www.http.KeepAliveCache.run() @bci=3, line=168 (Interpreted frame)

- java.lang.Thread.run() @bci=11, line=829 (Interpreted frame)

- jdk.internal.misc.InnocuousThread.run() @bci=20, line=134 (Interpreted frame)

"DestroyJavaVM" #171 prio=5 tid=0x000000011f809000 nid=0x2703 waiting on condition [0x0000000000000000]

java.lang.Thread.State: RUNNABLE

JavaThread state: _thread_blocked

This snapshot helps us understand how the application behaves, locally or in production, by answering questions such as:

Is our code compiled?

Is it waiting on a monitor?

What other threads are running and what are they doing?
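To see the Deadlock Detection section report something other than "No deadlocks found.", it helps to have a process that deadlocks on purpose. Below is a minimal sketch; `DeadlockDemo` and its thread names are hypothetical. Two daemon threads take two monitors in opposite order, and a `CountDownLatch` makes sure each thread holds its first lock before reaching for the second, so the deadlock doesn't depend on timing. Running `jhsdb jstack --pid <PID>` against it should list both threads in the deadlock report.

```java
import java.util.concurrent.CountDownLatch;

/** Hypothetical demo: two daemon threads that deadlock on purpose. */
public class DeadlockDemo {
    private static final Object LOCK_A = new Object();
    private static final Object LOCK_B = new Object();

    /** Starts two threads that acquire LOCK_A and LOCK_B in opposite order. */
    public static Thread[] startDeadlock() {
        // Released only once both threads hold their first lock.
        CountDownLatch bothHoldFirstLock = new CountDownLatch(2);
        Thread first = new Thread(() -> lockInOrder(LOCK_A, LOCK_B, bothHoldFirstLock), "worker-A-then-B");
        Thread second = new Thread(() -> lockInOrder(LOCK_B, LOCK_A, bothHoldFirstLock), "worker-B-then-A");
        for (Thread t : new Thread[] {first, second}) {
            t.setDaemon(true); // let the JVM exit even though these threads never finish
            t.start();
        }
        return new Thread[] {first, second};
    }

    private static void lockInOrder(Object outer, Object inner, CountDownLatch latch) {
        synchronized (outer) {
            latch.countDown();
            try {
                latch.await(); // wait until the other thread holds its first lock
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return;
            }
            synchronized (inner) {
                // Never reached: the other thread holds `inner` and waits for `outer`.
            }
        }
    }

    public static void main(String[] args) throws InterruptedException {
        startDeadlock();
        System.out.println("PID " + ProcessHandle.current().pid() + " is now deadlocked");
        Thread.sleep(Long.MAX_VALUE); // keep the process alive for inspection
    }
}
```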

Heap Memory Analysis with jmap

For a deep dive into heap memory, jmap is the tool to reach for. It displays detailed heap configuration and usage, which helps with GC tuning and performance optimization. Particularly useful is the --histo flag, which prints a histogram of the objects on the heap and can help identify potential memory leaks.

Typical usage of jmap is very similar to jstack and other tools mentioned in this post:

$ jhsdb jmap --pid 1234 --heap

Attaching to process ID 1234, please wait...

Debugger attached successfully.

Server compiler detected.

JVM version is 11.0.13+8-LTS

using thread-local object allocation.

Garbage-First (G1) GC with 9 thread(s)

Heap Configuration:

MinHeapFreeRatio = 40

MaxHeapFreeRatio = 70

MaxHeapSize = 17179869184 (16384.0MB)

NewSize = 1363144 (1.2999954223632812MB)

MaxNewSize = 10305404928 (9828.0MB)

OldSize = 5452592 (5.1999969482421875MB)

NewRatio = 2

SurvivorRatio = 8

MetaspaceSize = 21807104 (20.796875MB)

CompressedClassSpaceSize = 1073741824 (1024.0MB)

MaxMetaspaceSize = 17592186044415 MB

G1HeapRegionSize = 4194304 (4.0MB)

Heap Usage:

G1 Heap:

regions = 4096

capacity = 17179869184 (16384.0MB)

used = 323663048 (308.6691360473633MB)

free = 16856206136 (16075.330863952637MB)

1.8839668948203325% used

G1 Young Generation:

Eden Space:

regions = 66

capacity = 780140544 (744.0MB)

used = 276824064 (264.0MB)

free = 503316480 (480.0MB)

35.483870967741936% used

Survivor Space:

regions = 8

capacity = 33554432 (32.0MB)

used = 33554432 (32.0MB)

free = 0 (0.0MB)

100.0% used

G1 Old Generation:

regions = 4

capacity = 478150656 (456.0MB)

used = 13284552 (12.669136047363281MB)

free = 464866104 (443.3308639526367MB)

2.7783193086322986% used

In most cases this might seem like gibberish, but when you experience GC thrashing it can be a secret weapon in your arsenal. You can use it to fine-tune GC settings and determine the right parameters to set. Since it attaches to a live process, you can base those decisions on real-world observations. Keep in mind that the Serviceability Agent suspends the target process while it is attached, so the JVM won't respond until the tool detaches.
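If you want to track a few of these numbers continuously rather than through occasional snapshots, similar per-pool figures are available from inside the process through the standard java.lang.management API. This is a minimal sketch, not a replacement for jmap; the class name is hypothetical, and the pool names depend on the collector (with G1 they are typically "G1 Eden Space", "G1 Survivor Space" and "G1 Old Gen").

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryPoolMXBean;
import java.lang.management.MemoryType;
import java.lang.management.MemoryUsage;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper: per-pool heap usage, comparable to the "Heap Usage" part of jmap --heap. */
public class HeapPoolsDemo {
    /** Returns one formatted line per heap memory pool. */
    public static List<String> heapPoolSummaries() {
        List<String> lines = new ArrayList<>();
        for (MemoryPoolMXBean pool : ManagementFactory.getMemoryPoolMXBeans()) {
            // Skip non-heap pools such as Metaspace and the code cache.
            if (pool.getType() != MemoryType.HEAP) {
                continue;
            }
            MemoryUsage usage = pool.getUsage(); // null only if the pool is no longer valid
            if (usage == null) {
                continue;
            }
            // getMax() returns -1 when the pool has no defined maximum.
            lines.add(String.format("%-20s used=%,d committed=%,d max=%,d",
                    pool.getName(), usage.getUsed(), usage.getCommitted(), usage.getMax()));
        }
        return lines;
    }

    public static void main(String[] args) {
        heapPoolSummaries().forEach(System.out::println);
    }
}
```

Logging these lines periodically, for example before and after changing a GC flag, gives you a trend to compare against the jmap snapshots.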

If you can reproduce a memory leak but don't have a debugger attached, you can use jmap to generate a memory histogram:

$ jhsdb jmap --pid 72640 --histo

Attaching to process ID 72640, please wait...

Debugger attached successfully.

Server compiler detected.

JVM version is 11.0.13+8-LTS

Iterating over heap. This may take a while...

Object Histogram:

num #instances #bytes Class description

--------------------------------------------------------------------------

1: 225689 204096416 int[]

2: 485992 59393024 byte[]

3: 17221 23558328 sun.security.ssl.CipherSuite[]

4: 341376 10924032 java.util.HashMap$Node

5: 117706 9549752 java.util.HashMap$Node[]

6: 306720 7361280 java.lang.String

7: 12718 6713944 char[]

8: 113884 5466432 java.util.HashMap

9: 64683 4657176 java.util.regex.Matcher

10: 95612 4615720 java.lang.Object[]

11: 106233 4249320 java.util.HashMap$KeyIterator

12: 16166 4090488 long[]

13: 126977 4063264 java.util.concurrent.ConcurrentHashMap$Node

14: 150789 3618936 java.util.ArrayList

15: 130167 3546016 java.lang.String[]

16: 156237 3227152 java.lang.Class[]

17: 33145 2916760 java.lang.reflect.Method

18: 32193 2575440 nonapi.io.github.classgraph.fastzipfilereader.FastZipEntry

19: 17314 2051672 java.lang.Class

20: 32043 1794408 io.github.classgraph.ClasspathElementZip$1

21: 107918 1726688 java.util.HashSet

22: 105970 1695520 java.util.HashMap$KeySet

This can help narrow down the source of the issue. There are better tools for that in the IDE during development, but for a running server, even a local one, the histogram gives you a quick snapshot of what occupies the heap without any extra setup.
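To practice reading the histogram, you can run a program with a deliberate leak and take a few histograms a minute apart. In this minimal sketch (all names hypothetical), a static list that is only ever appended to keeps every `LeakedEntry` reachable, so the instance count for `LeakDemo$LeakedEntry`, along with the `byte[]` count, should keep growing from one histogram to the next.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical demo: a slow, deliberate memory leak for jmap --histo practice. */
public class LeakDemo {
    /** Hypothetical entry type: gives the leak a recognizable name in the histogram. */
    static final class LeakedEntry {
        private final byte[] payload = new byte[1024]; // roughly 1 KB per entry
    }

    // The "leak": a static list that is only ever appended to, never cleared.
    private static final List<LeakedEntry> CACHE = new ArrayList<>();

    /** Adds `count` entries and returns the total number retained so far. */
    public static int leak(int count) {
        for (int i = 0; i < count; i++) {
            CACHE.add(new LeakedEntry());
        }
        return CACHE.size();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("PID: " + ProcessHandle.current().pid());
        while (true) {
            leak(1_000);         // about 1 MB retained per iteration
            Thread.sleep(1_000); // slow enough to watch the counts grow
        }
    }
}
```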

Basic JVM Insights with jinfo

Though not as detailed as other commands, jinfo is useful for a quick glance at system properties and JVM flags, especially on unfamiliar machines. It's a straightforward tool that requires just a PID to function.

jhsdb jinfo --pid 1234
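When you can change the code, you can cross-check part of what jinfo reports from inside the JVM. This minimal sketch (class name hypothetical) prints a couple of system properties and the arguments passed to the JVM using the standard RuntimeMXBean. It only covers a subset: it does not show the full set of VM flags that jinfo prints.

```java
import java.lang.management.ManagementFactory;
import java.util.List;

/** Hypothetical helper: prints a small subset of what jhsdb jinfo reports, from inside the JVM. */
public class JvmInfoDemo {
    /** Arguments passed to the JVM itself (such as -Xmx or -XX options), not to main(). */
    public static List<String> jvmArguments() {
        return ManagementFactory.getRuntimeMXBean().getInputArguments();
    }

    public static void main(String[] args) {
        System.out.println("java.vm.version = " + System.getProperty("java.vm.version"));
        System.out.println("java.vm.vendor  = " + System.getProperty("java.vm.vendor"));
        System.out.println("JVM arguments   = " + jvmArguments());
    }
}
```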

Performance Metrics with jsnap

jsnap prints a wealth of internal performance counters, such as the number of started, live, and peak threads or loaded classes. This data is useful for fine-tuning settings like thread pool sizes, which directly affect overhead in production.

$ jhsdb jsnap --pid 72640

Attaching to process ID 72640, please wait...

Debugger attached successfully.

Server compiler detected.

JVM version is 11.0.13+8-LTS

java.threads.started=418 event(s)

java.threads.live=12

java.threads.livePeak=30

java.threads.daemon=8

java.cls.loadedClasses=16108 event(s)

java.cls.unloadedClasses=0 event(s)

java.cls.sharedLoadedClasses=0 event(s)

java.cls.sharedUnloadedClasses=0 event(s)

java.ci.totalTime=23090159603 tick(s)

java.property.java.vm.specification.version=11

java.property.java.vm.specification.name=Java Virtual Machine Specification

java.property.java.vm.specification.vendor=Oracle Corporation

java.property.java.vm.version=11.0.13+8-LTS

java.property.java.vm.name=OpenJDK 64-Bit Server VM

java.property.java.vm.vendor=Azul Systems, Inc.

java.property.java.vm.info=mixed mode

java.property.jdk.debug=release
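The thread counters above should correspond closely to values exposed by the standard ThreadMXBean API, which is handy if you'd rather log them from inside the application while experimenting with thread pool sizes. This is a minimal sketch under that assumption; the class name and the 8-thread pool are hypothetical, and the map keys simply name the jsnap counter each value is meant to be compared with.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.ThreadMXBean;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

/** Hypothetical helper: thread statistics keyed by the jsnap counter they resemble. */
public class ThreadCountersDemo {
    public static Map<String, Long> threadCounters() {
        ThreadMXBean threads = ManagementFactory.getThreadMXBean();
        Map<String, Long> counters = new LinkedHashMap<>();
        counters.put("java.threads.started", threads.getTotalStartedThreadCount());
        counters.put("java.threads.live", (long) threads.getThreadCount());
        counters.put("java.threads.livePeak", (long) threads.getPeakThreadCount());
        counters.put("java.threads.daemon", (long) threads.getDaemonThreadCount());
        return counters;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("Before pool: " + threadCounters());
        // Hypothetical workload: a fixed pool of 8 threads, each busy for a moment.
        ExecutorService pool = Executors.newFixedThreadPool(8);
        for (int i = 0; i < 8; i++) {
            pool.submit(() -> {
                Thread.sleep(500);
                return null;
            });
        }
        System.out.println("With pool:   " + threadCounters());
        pool.shutdown();
        pool.awaitTermination(5, TimeUnit.SECONDS);
        // The live count should drop after shutdown, while livePeak keeps the high-water mark.
        System.out.println("After pool:  " + threadCounters());
    }
}
```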

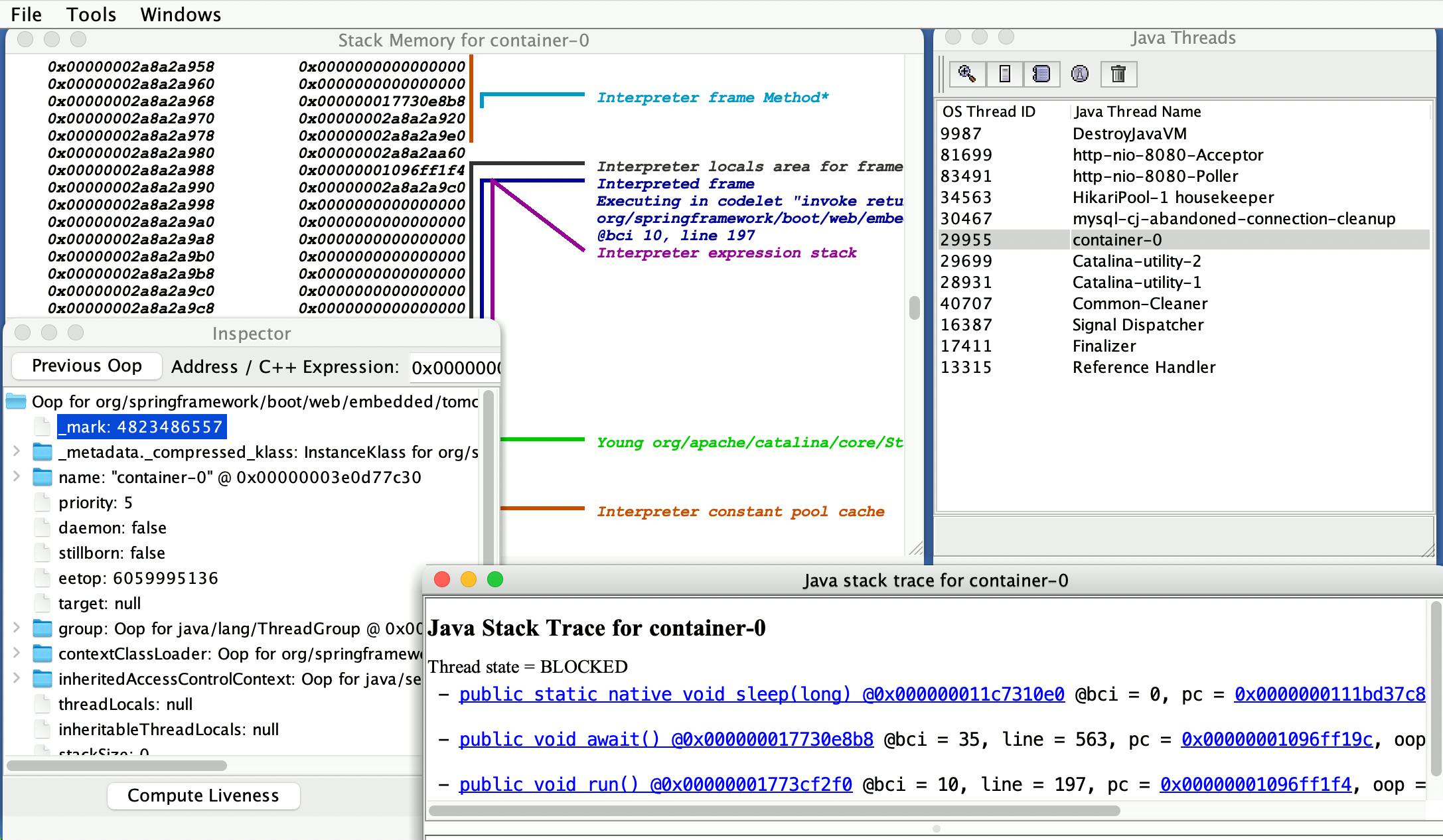

GUI Debugging: A Visual Approach

We'll skip over the CLI debugger, but the GUI debugger deserves a mention for its user-friendly interface, which lets you connect to core files, debug servers, or PIDs with ease. Seeing JVM internals visually is especially helpful when working with JNI native code.

The GUI debugger can be launched just like the other jhsdb tools:

jhsdb hsdb --pid 1234

The GUI layout is designed for ease of navigation, offering a comprehensive view of JVM internals at a glance. Here are some key features and how to use them:

File Menu: This is your starting point for connecting to debugging targets. You can load core files for post-mortem analysis, attach to running processes to diagnose live issues, or connect to remote debug servers (though, as mentioned in the debugd section, a bug prevented that last option from working for me).

Threads and Monitors: The GUI shows the state of every thread at the moment it attached, making it easier to identify deadlocks, thread contention, and monitor locks. This visual representation simplifies pinpointing concurrency issues that could be affecting application performance.

Heap Summary: For memory analysis, the GUI debugger gives a graphical overview of heap usage, including generations (for GC analysis), object counts, and memory footprints. This makes identifying memory leaks and optimizing garbage collection strategies more intuitive.

Method and Stack Inspection: Delving into method executions and stack frames is seamless, allowing you to trace the execution path, inspect local variables, and evaluate the state of the application at different points in time.

Final Word

jhsdb stands out as an essential tool in the debugging toolkit, especially for those dealing with JVM and native code issues. Its range of capabilities, from deep memory analysis to performance metrics, makes it a versatile choice for developers and system administrators alike.

The biggest benefit is in debugging the interaction between Java code and native code. Such code often fails in odd ways, and on end-user machines. In those situations a typical debugger might not be the right tool and might not expose the whole picture. This is especially true when you have a JVM core dump, which is the main use case for jhsdb.